There is a lot of righteous anger directed toward Intel over CPU bugs that were revealed by Spectre/Meltdown. I agree that things could have been handled better, particularly with regard to transparency and the sharing of information among the relevant user communities that could have worked together to deploy effective patches in a timely fashion. People also aren’t wrong that consumer protection laws obligate manufacturers to honor warranties, particularly when a product is not fit for use as represented, contains defective material or workmanship, or fails to meet regulatory requirements.

However, as an open source hardware optimist, and someone who someday aspires to see more open source silicon on the market, I want to highlight that demanding Intel return, exchange, or offer rebates on CPUs purchased within a reasonable warranty period is entirely at odds with demands that Intel act with greater transparency in sharing bugs and source code.

Transparency is Easy When There’s No Penalty for Bugs

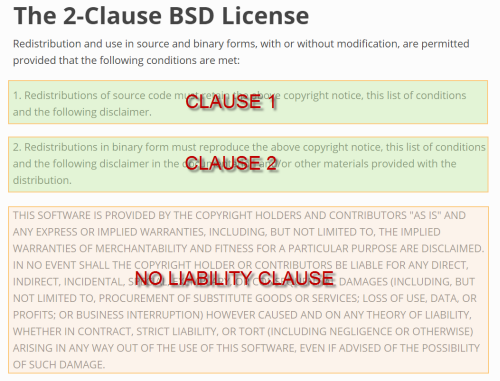

It’s taken as motherhood and apple pie in the open source software community that transparency leads to better products. The more eyes staring at a code base, the more bugs that can be found and patched. However, a crucial difference between open source software and hardware is that open source software carries absolutely no warranty. Even the most minimal, stripped down OSS licenses stipulate that contributors carry no liability. For example, the BSD 2-clause license has 189 words, of which 116 (about 61%) are dedicated to a “no warranty” clause – and all in caps, in case you weren’t paying attention. The no-warranty clause is so core to any open source license that it doesn’t even count as a clause in the 2-clause license.

Of course contributors have no liability: this lack of liability is fundamental to open source. If people could sue you for some crappy code you pushed to GitHub years ago, why would you share anything? GitHub would be a ticking time bomb of financial ruin for every developer.

It’s also not about code being easier to patch than hardware. The point is that you don’t have to patch your code, even if you could. Someone can file a bug against you, and you have the legal right to ignore it. And if your code library happens to contain an overflow bug that results in a house catching fire, you walk away scot-free because your code came with no warranty of fitness for any purpose whatsoever.

Oohh, Shiny and New!

Presented with a bin of apples, most will pick a blemish-free fruit from the bushel before heading to the check-out counter. Despite knowing the reality of nature – that every fruit must grow from a blossom under varying conditions and hardships – we believe our hard-earned money should only go toward the most perfect of the lot. This feeling is so much a matter of common sense that it’s codified in the form of consumer protection laws and compulsory warranties.

This psychology extends beyond obvious blemishes, to defects that have no impact on function. Suppose you’re in the market to buy a one-slot toaster. You’re offered two options: a one-slot toaster, and a two-slot toaster but with the left slot permanently and safely disabled. Both are exactly the same price. Which one do you buy?

Most people would buy the toaster with one slot, even though the net function might be identical to the two-slot version where one slot is disabled. In fact, you’d probably be infuriated and demand your money back if you bought the one-slot toaster, but opened the box to find a two-slot toaster with one slot disabled. We don’t like the idea of being sold goods that have anything wrong with them, even if the broken piece is irrelevant to performance of the device. It’s perceived as evidence of shoddy workmanship and quality control issues.

News Flash: Complex Systems are Buggy!

Hold your breath – I’d wager that every computer you’ve bought in the past decade has broken parts inside it, almost exactly like the two-slot toaster with one slot permanently disabled. There’s the set of features that were intended to be in your chips – and there’s the subset of features that finally shipped. What happened to the features that weren’t shipped? Surely, they did a final pass on the chip to remove all that “dead silicon”.

Nope – most of the time those partially or non-functional units are simply disabled. This ranges from blocks of cache RAM, to whole CPU cores, to various hardware peripherals. Patching a complex chip design can cost millions of dollars and take weeks or even months, so no company can afford to do a final “clean-up” pass to create a “perfect” design. To be clear, manufacturers never misrepresent the product to consumers – if only half the cache were available, the spec sheet would simply report the cache size as 128kB instead of 256kB. But surely some customers would have complained bitterly if they knew of the defect sold to them.
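As a rough illustration of how a partially defective die can still ship with an honest spec sheet, the sketch below models a chip as a list of blocks, leaves the failed ones on the die but disabled, and reports only what remains. The `Block` class, the `spec_sheet` helper, and the block names are hypothetical, chosen to match the 128kB/256kB example above; this is not any vendor’s actual binning flow.

```python
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    kind: str                 # "cache" or "core" (hypothetical categories)
    size_kb: int = 0          # only meaningful for cache blocks
    passed_test: bool = True  # result of a hypothetical production test


def spec_sheet(blocks):
    """Report only units that passed test; failed units stay on the die, disabled."""
    enabled = [b for b in blocks if b.passed_test]
    return {
        "cache_kb": sum(b.size_kb for b in enabled if b.kind == "cache"),
        "cores": sum(1 for b in enabled if b.kind == "core"),
        "disabled_units": [b.name for b in blocks if not b.passed_test],
    }


# A die designed with 256kB of cache, where one 128kB block failed test.
die = [
    Block("cache0", "cache", 128),
    Block("cache1", "cache", 128, passed_test=False),
    Block("core0", "core"),
    Block("core1", "core"),
]
sheet = spec_sheet(die)
print(sheet)
```

The published spec matches what the customer can use, yet the disabled block is still physically there, which is the two-slot toaster all over again.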

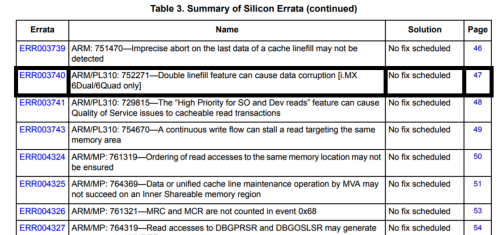

Despite being chock full of bugs, vendors of desktop CPUs or mobile phone System on Chips (SoCs) rarely disclose these bugs to users – and those that do disclose almost always disclose a limited list of public bugs, backed by an NDA-only list of all the bugs. The top two reasons cited for keeping chip specs secret are competitive advantage and liability, and I suspect in reality, it’s the latter that drives the secrecy, because the crappier the chipset, the more likely the specs are under NDA. Chip vendors are deathly afraid users will find inconsistencies between the chip’s actual performance and the published specs, thus triggering a recall event. This fear may seem more rational if you consider the magnitude of Intel’s FDIV bug recall ($475 million in 1994).

This is a pretty typical list of SoC bugs, known as “errata”. If your SoC’s errata list is much shorter than this, it’s more likely due to bugs not being disclosed than to there actually being fewer bugs.

If you Want Messages, Stop Shooting the Messengers

Highly esteemed and enlightened colleagues of mine are strongly of the opinion that Intel should reimburse end users for bugs found in their silicon; yet in the same breath, they complain that Intel has not been transparent enough. The point that has become clear to me is that consumers, even open-source activists, are very sensitive to imperfections, however minor. They demand a “perfect” machine; if they spend $500 on a computer, every part inside better damn well be perfect. And so starts the vicious cycle of hardware manufacturers hiding all sorts of blemishes and shortcomings behind various NDAs, enabling them to bill their goods as perfect for use.

You can’t have it both ways: the whole point of transparency is to enable peer review, so you can find and fix bugs more quickly. But if every time a bug is found, a manufacturer had to hand $50 to every user of their product as a concession for the bug, they would quickly go out of business. This partially answers the question why we don’t see open hardware much beyond simple breakout boards and embedded controllers: it’s far too risky from a liability standpoint to openly share the documentation for complex systems under these circumstances.
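To put rough numbers on that claim, here is a back-of-the-envelope sketch. The $50 concession comes from the paragraph above; the unit count, per-unit margin, and number of disclosed bugs are made-up illustrative values, not figures for any real product.

```python
def payout_vs_profit(units_sold, margin_per_unit, concession_per_bug, bugs_disclosed):
    """Compare total concessions paid out against total profit on a product line."""
    profit = units_sold * margin_per_unit
    payout = units_sold * concession_per_bug * bugs_disclosed
    return profit, payout


profit, payout = payout_vs_profit(
    units_sold=1_000_000,    # hypothetical
    margin_per_unit=100,     # hypothetical, in dollars
    concession_per_bug=50,   # from the text
    bugs_disclosed=3,        # hypothetical
)
print(f"profit ${profit:,} vs payout ${payout:,}")
```

Under these assumed numbers, three disclosed bugs are enough to wipe out the entire profit on the product, so every additional disclosure is a direct loss.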

To simply say, “but hardware manufacturers should ship perfect products because they are taking my money, and my code can be buggy because it’s free of charge” – is naïve. A modern OS has tens of millions of lines of code, yet it benefits from the fact that every line of code can be replicated perfectly. Contrast that with a modern CPU with billions of transistors, each with slightly different electrical characteristics. We should all be more surprised that it took so long for a major hardware bug to be found than that one was found at all.

Complex systems have bugs. Any system with primitives measured in the millions or billions – be it lines of code, rivets, or transistors – is going to have subtle, if not blatant, flaws. Systems simple enough to formally verify are typically too simple to handle real-world tasks, so engineers must rely on heuristics like design rules and lots and lots of hand-written tests.

There will be bugs.

Realities of the Open Hardware Business

About a year ago, I had a heated debate with a SiFive founder about how open they could be with their documentation. SiFive markets a RISC-V CPU, billed as an “open source CPU”, and many open source enthusiasts got excited about the prospect of a fully-open SoC that could finally eliminate proprietary blobs from the boot chain and ultimately, through the same process of peer review found in the open source software world, yield a more secure, trustable hardware environment.

However, even one of their most ardent open-source advocates pushed back quite hard when I suggested they should share their pre-boot code. By pre-boot code, I’m not talking about the little ROM blob that gets run after reset to set up your peripherals so you can pull your bootloader from SD card or SSD. That part was a no-brainer to share. I’m talking about the code that gets run before the architecturally guaranteed “reset vector”. A number of software developers (and alarmingly, some security experts) believe that the life of a CPU begins at the reset vector. In fact, there’s often a significant body of code that gets executed on a CPU to set things up to meet the architectural guarantees of a hard reset – bringing all the registers to their reset state, tuning clock generators, gating peripherals, and so forth. Critically, chip makers heavily rely upon this pre-boot code to also patch all kinds of embarrassing silicon bugs, and to enforce binning rules.
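A minimal sketch of that ordering may help, assuming a purely hypothetical `pre_boot` routine. The step names, the `erratum-A` patch, and the `max_cores` bin rule are invented for illustration and do not describe any real chip’s pre-boot code.

```python
def pre_boot(known_silicon_patches, bin_limits):
    """Return the ordered steps run before the reset vector (illustrative only)."""
    steps = [
        "bring registers to reset state",
        "tune clock generators",
        "gate peripherals",
    ]
    # Work around known silicon bugs before any architectural code can observe them.
    steps += [f"apply silicon patch: {p}" for p in known_silicon_patches]
    # Enforce binning rules, e.g. cap the number of usable cores for this part.
    steps += [f"enforce bin rule: {k}={v}" for k, v in bin_limits.items()]
    # Only now does execution reach the architecturally guaranteed entry point.
    steps.append("jump to reset vector")
    return steps


steps = pre_boot(["erratum-A"], {"max_cores": 2})
for s in steps:
    print(s)
```

Everything before the last step is invisible to software that assumes the CPU’s life begins at the reset vector.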

The gentleman with whom I was debating the disclosure of pre-boot code adamantly held that it was not commercially viable to share the pre-boot code. I didn’t understand his point until I witnessed open-source activists en masse demanding their pound of flesh for Intel’s mistakes.

As engineers, we should know better: no complex system is perfect. We’ve all shipped bugs, yet when it comes to buying our own hardware, we individually convince ourselves that perfection is a reasonable standard.

The Choice: Truthful Mistakes or Fake Perfection?

The open source community could use the Spectre/Meltdown crisis as an opportunity to reform the status quo. Instead of suing Intel for money, what if we sue Intel for documentation? If documentation and transparency have real value, then this is a chance to finally put that value in economic terms that Intel shareholders can understand. I propose a bargain somewhere along these lines: if Intel releases comprehensive microarchitectural hardware design specifications, microcode, firmware, and all software source code (e.g. for AMT/ME) so that the community can band together to hammer out any other security bugs hiding in their hardware, then Intel is absolved of any payouts related to the Spectre/Meltdown exploits.

This also sets a healthy precedent for open hardware. In broader terms, my proposed open hardware bargain is thus: Here’s the design source for my hardware product. By purchasing my product, you’ve warranted that you’ve reviewed the available design source and decided the open source elements, as-is, are fit for your application. So long as I deliver a product consistent with the design source, I’ve met my hardware warranty obligation on the open source elements.

In other words, the open-source bargain for hardware needs to be a two-way street. The bargain I set forth above:

- Rewards transparency with indemnity against yet-to-be-discovered bugs in the design source

- Burdens any residual proprietary elements with the full liability of fitness for purpose

- Simultaneously conserves a guarantee that a product is free from defects in materials and workmanship in either case

The beauty of this bargain is it gives a real economic benefit to transparency, which is exactly the kind of wedge needed to drive closed-source silicon vendors to finally share their full design documentation, with little reduction of consumer protection.

So, if we really desire a more transparent, open world in hardware: give hardware makers big and small the option to settle warranty disputes for documentation instead of cash.

Author’s Addendum (added Feb 2 14:47 SGT)

This post has 2 aspects to it:

The first is whether hardware makers will accept the offer to provide documentation in lieu of liability.

The second, and perhaps more significant, is whether you would make the offer for design documentation in lieu of design liability in the first place. It’s important that companies who choose transparency be given a measurable economic advantage over those who choose obscurity. In order for the vicious cycle of proprietary hardware to be broken, both consumer and producer have to express a willingness to value openness.

[…] Spectre/Meltdown Pits Transparency Against Liability 2 by beardicus | 0 comments on Hacker News. […]

[…] Article URL: https://www.bunniestudios.com/blog/?p=5127 […]

“I propose a bargain somewhere along these lines: if Intel releases comprehensive microarchitectural hardware design specifications, microcode, firmware, and all software source code (e.g. for AMT/ME)”

That is a wonderful suggestion, but it has about a 0% chance of becoming reality. Intel will risk a global recall of their chips before they would ever hand over this information.

Not only because the MBAs will be screaming “proprietary information” until they’re blue in the face, but also because I suspect it would open Intel up to about a million patent lawsuits.

This is why we can’t have nice things.

The question was not whether Intel would accept the settlement for documentation. I am aware of that being a tall ask on their part.

The question was whether /you/ would offer it. It’s more important that the offer is on the table as an option, so that companies which choose transparency can be given a measurable economic advantage over those who choose obscurity.

In order for the vicious cycle of proprietary hardware to be broken, both consumer and producer have to create opportunities for valuing openness.

> The question was whether /you/ would offer it. It’s more important that the offer is on the table as an option, so that companies which choose transparency can be given a measurable economic advantage over those who choose obscurity.

Yes, I would offer/accept open documentation in lieu of monetary compensation. I, of course, speak for no purchasing department of a Fortune 100 company, so no one is likely to listen to me.

I agree 100% with your proposal that companies should be offered an alternative to costly lawsuits by agreeing to release detailed specifications and documentation if you (the customer) agree not to sue us.

Yup, done! Where is my documentation? I can show myself out.

[…] corbet Here’s a blog post from bunnie Huang on the tension between transparency and product liability around hardware flaws. “The open […]

My own view is that Spectre and Meltdown are a new thing: attacks on microarchitecture plus exploitation of side channels. I think side channels are an unsolved problem in security in general. Requirements for secure systems only limit the side channel bandwidth – no one knows how to eliminate them.

This is just another proof that multilevel secure systems are, if not impossible, really really hard.

I am not even sure that Spectre is a design defect at all. It’s as though someone found out you could induce epilepsy with a blinking traffic light and blamed it on Ford. “They should have known not to include a window!” Future models will have electro-optic dimmers in the glass, but you will need a new car. It is a new threat.

Sharing the branch predictor state between security domains is a design defect. If the branch predictor were per-thread and swapped on a context switch or change in ring level, I doubt this would be exploitable.

That’s Variant 2. Variant 3 is a design defect too. The problem one is Variant 1. How do you guard against the observability of speculative execution in general? Even enforcing a complete microarchitectural reset when switching contexts (way, way too expensive) won’t fix it, because that one is potentially exploitable remotely, from a remote procedure call or even over the network, if the software conditions align right.


I think there’s another nuance to Spectre/Meltdown, which is that I’m personally hard-pressed to call these bugs/errata at all, as I’m not aware that specification guarantees were made regarding side-channel communication timing vulnerabilities! This puts them in a class apart from FDIV.

These vulnerabilities represent a whole class of conceptual security weaknesses based on the unforeseen implications of how we’ve been building high-performance CPUs for more than two decades. It is simply not possible to unwind that, and there are surely many more similar problems to be realized in the coming year.

The argument of liability might be more interesting in the case of a crypto-coprocessor being vulnerable to differential power analysis, but even then it’d be hard to make a convincing case before researchers (outside of, perhaps, three-letter agencies) were aware of the possible form of attack.

I do wish for the sort of more open world of processors you describe, but I agree with other commenters that this is far out of reach. The hypothetical liability side of things is only one small aspect of the reason for closed systems.

If you create anything, physical or intellectual property, and suggest that others should use it, paid or not, then you should be held liable to an appropriate extent.

Lawyers are able to do pro bono work, whilst still being held liable for professional misconduct. Lawyers are allowed to fail and provided they are honest and they are working to the qualities they have advertised they have, then who they represent has to trust them.

We need codes of conduct to work by, and we should classify open source contributions by the code of conduct under which they were created.

Then software developers can be held liable and should be protected by an insurance fund to pay for limited damages ($1m per dev? – I already have £2m liability for my software contributions, so perhaps it could be higher).

Then like doctors, if we screw up, people can sue us for damages. So others know they can make a claim and if we act without appropriate conduct, then we can lose our access to insurance and in severe cases, lose the ability to publish software. We should have fixed premiums that are preserved after damage claims if there is no evidence of misconduct.

This is in theory pretty easy to achieve… the harder part is the codes of conduct… but if other industries can do it (law, medicine, accountancy, etc.), which are often far harder than ours, then it should be easy to draft up a Good Software Practice.

We can apply a matrix to work out which codes of conduct a project needs.

If a consumer needs an elevated quality, then they have to do their own review and QA to meet that standard (a bit like vanilla Linux versus a Red Hat hardened kernel).

As with food and drugs, the quick stuff we digest daily, made from trusted ingredients, should be easy to create and turn around quickly (like food in a kitchen: news websites and games).

However, the complex parts that have the highest risks should be surrounded by process-heavy work to assure quality, like a 10-year drug project – appropriate for operating systems written from scratch, car automation software, etc.

In clinical software, this requirement is actually already in place (inherited from ICH GCP).

So let’s do it everywhere and create trust in open source and proprietary code.

My biggest fear if I contribute to an open source project and someone suffers is that they cannot sue me when I have caused them harm… so I really want to be held liable, and protected by a professional industry structure that guarantees I can continue to work too.

The best bit for big open source projects (like the Linux kernel) is that as the free software gets bigger, the financial liability per developer’s insurance policy gets smaller?

So I think the solution is that open source and transparency are the only acceptable shield from liability.

Dan Geer proposed this in his Black Hat talk. https://blog.valbonne-consulting.com/2015/07/24/cybersecurity-as-realpolitik-dan-greer/

“but also because I suspect it would open Intel up to about a million patent lawsuits.”

Them and everyone else. A guy that taught me a lot about hardware said basically everyone was obfuscating both to make their IP last longer against cloners and to hide from patent suits. He said there were so many on microarchitecture that it might be either hard or impossible to build a compatible product without infringing on something. Especially what a big player has. His employer refused to sell to American buyers as added risk reduction.

So, he spent a lot of his time either obfuscating employers’ IP or reverse engineering that of 3rd parties. He told me ChipWorks was big on teardowns to find IP theft for big patent holders. Finally, he said one time a competitor cloned his ASICs down to the transistor level. So, it’s a crazy game in hardware that involves many people who will take your money, clone your IP, or try to shut down your business to stay monopolies or oligopolies.

The ‘ole adage,

If it works don’t screw with it!

If you screw with it and break it then,

You screw the Mfgr out of multiples of orig cost !$$!.

Interesting that a processor(s) running in the GHz can

execute instructions and data to cycles the human mind

cannot comprehend without really making an error, but it

takes a human to screw with it and it then seeks revenge by

spilling out your private parts for the world to see!

Then in the end, either way, you throw it out. After a few million

years or so, it becomes a reborn silicon, the story repeats.

Moral: Don’t screw with me or I’ll screw you!

Really now, like everything else, it’s a people problem.

It’s hard coded in our DNA for better or worse.

PS: NO Offense implied Bunnie and All, evil is the absence of good,

The Digital Fifth is Bipolar so is Civilization. /ˌ$ivələˈzā$H(ə)n/

But my Intel and AMD stocks ascended! Can’t wait for large scale AI.


Less punishment leads to more transparency … this has been proven in the aviation world.

When pilots and other crews are not afraid to report that XYZ happened during their flight then the technical community has a chance to look at it and identify root causes. That leads to better software, better hardware, better communication, and better procedures. And that leads to more safety.

s/safety/bugs/g. It works.

In my opinion, no contributor to this topic seems to understand that he or she is but a fish swimming in the waters of capitalism and private-property fetishism.

Leon Trotsky, who founded the Fourth International (after the historic betrayals of the Stalinized Comintern), had this to say on “business secrets”:

“The accounts kept between the individual capitalist and society remain [in bourgeois society] the secret of the capitalist: they are not the concern of society. The motivation offered for the principle of business ‘secrets’ is ostensibly, as in the epoch of liberal capitalism, that of ‘free competition.’ In reality, the trusts keep no secrets from one another. The business secrets of the present epoch are part of a persistent plot of monopoly capitalism against the interests of society. Projects for limiting the autocracy of ‘economic royalists’ will continue to be pathetic farces as long as private owners of the social means of production can hide from producers and consumers the machinations of exploitation, robbery and fraud. The abolition of ‘business secrets’ is the first step toward actual control of industry.

“Workers no less than capitalists have the right to know the ‘secrets’ of the factory, of the trust, of the whole branch of industry, of the national economy as a whole. First and foremost, banks, heavy industry and centralized transport should be placed under an observation glass.”

Leon Trotsky, “The Transitional Program,” 1938

I claim that Trotsky’s attitude toward heavy industry and transport, as he expressed it eighty years ago, is precisely what open-source advocates are groping for right now. But until the movement of workers in every industry turns on the lights, nothing will improve. The barons of capitalism will not cease defending their “property rights.” The rest of us be damned.

Thanks for the article, I am glad that this message from you reaches many people.

The best way to prevent liability is to provide complete transparency. Is there a way to improve this?

Bunnie, this sounds like you are calling for something similar to a liability patent concept. Whereas traditional patents provide for a period of exclusivity in exchange for documenting an “invention”, in this case the payoff would be limited liability.

I can see how it would work in many specific cases. But in general I seriously doubt that a balance can be struck on what limited liability really can be. If it’s too broad, it’s unlikely to pass because too many would argue it encourages poor designs. If it’s too narrow, the concept fails because there is no payoff for documentation. And the perfect middle ground is likely very narrow with little or no equilibrium.

So it might be easier to nibble around the edges of the issue. E.g. the right-to-repair laws that relate to things like automobiles in some US states. Perhaps a right-to-hack law might even pass in a place like California…?

Bunnie, I think the issues that Meltdown/Spectre bring are far beyond the issue of having more documentation on the internals of CPUs and other devices. I believe that Meltdown/Spectre are not merely bugs but the result of the way computers have been designed, especially with the dominance of x86 in lieu of other architectures. It is much more an issue of the way computer architecture research has been treated for the last 30 years, as a proposer of fixes (I don’t want to say bodges…) to commercial CPU architectures. Speculative execution is one of these fixes: it overcomplicates already complex architectures (1400+ instructions) to extract performance through cheap transistors (thanks Moore’s Law).

To some extent, ever smaller and cheaper transistors killed research in fundamental CPU architecture. But that also allows us now (since transistors are cheap and won’t get any faster) to find better solutions, and for that open-source hardware should be a great enabler.

RISC-V is a great example of bringing back the discussion on alternative architectures, even with limitations on its open-sourceness, from a commercial point of view. My hopes and efforts are that we could have open-source hardware as a way to have efficient, lean CPUs that are also open for scrutiny and could be fundamentally improved over time, which is the opposite of what has been happening, with the dominance of a few companies and a single computing paradigm.

The core argument is interesting but deeply flawed in its assumptions and presented evidence. Products sold are judged on the claims made for purpose. If the manufacturers claim the CPU is flawless, that is their mistake.

The alternatives are not binary artificial pairs of forgiveness and progress or harsh penalties and secretiveness.

An anecdote from a different industry, for comparison. In California many building developers fear building condominiums because any flaws expose them to legal risk. Yet our brand new buildings are built to a shoddy standard which nearly guarantees flaws.

Contrast with Japan, where large scale developments go up nonstop, at high precision and with few flaws. Yet there are severe penalties for mistakes. A large and expensive building in a neighborhood I used to work in was within months of move-in readiness when the entire building site stopped progressing and then spent the next year or more preparing to demolish the work, because a building code mistake had been made that could not be fixed.

Our industry’s cowboy mentality only succeeds so far because it is so difficult to detect the flaws. But maybe that means we just need to get better at designing systems that are fault tolerant and, to borrow a phrase, “anti-fragile”.

Reading into cache irrespective of memory access permissions is obviously risky. With such risks come the inherent costs of those risks failing.

You seem to be reporting from a universe in which OpenSPARC hasn’t existed for over a decade. Are there any other peculiarities we may find interesting?

A really interesting idea, but I don’t see how existing laws could support this offer, nor how lawmakers would agree to change the law so that it does.

Let’s say I design and sell an IoT toaster-oven. I use the concept outlined in the post, and open all my SW and HW to be fully open-source, in exchange for non-liability. Now, I had a bug in my code that turns the oven on for hours at a time, in the middle of the night, if some obscure corner-case condition is met. It leads to 13 house fires, in which 7 people die. Now I come to my trial, and basically say, “I’m very sorry for this, but it’s not my problem. The product was fully open-source. I have no liability here. My thoughts and prayers are with the families of the dead and injured”.

I am not a lawyer and don’t even claim to be knowledgeable about these matters, but in my opinion, common sense says I will definitely be held fully liable. Furthermore, I can’t imagine any lawmaker agreeing to change the law so that I won’t be liable in such a case.

Granted, this is an extreme example, but even if the damage was only in property and not in life, I still can’t see the ‘non-liability’ sticking, nor any lawmaker daring to change the law so it would. So, can we (or should we), as part of the open source community, make this offer to hardware companies, considering that the chances this offer actually holds in court are slim?

Meanwhile, IBM with POWER9 has had no problem sharing all of the firmware source code as part of OpenPOWER – including all the code that’s run before the first POWER9 core is even up and running executing firmware out of L3 cache. Also, please do send us patches to firmware – we do accept contributions (it’s all on GitHub – github.com/open-power ).

There’s also a company making a Micro-ATX board and bundling it with a 4 core POWER9 CPU for a special of under $1k USD (see https://raptorcs.com/content/BK1B01/intro.html ), which, as someone who’s worked on this stuff for nearly 5 years now, I am *really* happy to be able to point to (and buy). Additionally, it ships with OpenBMC, so the software on the service processor is also all open.

So, apart from the source to the silicon itself, and a few blobs in accessories like the NIC and HDD/SSD – you’ve got more source access and freedom-preserving hardware than any other modern desktop system (at least that I can find – and I’ll happily be proved wrong).