Making and breaking security go hand in hand. I’ve talked a lot about how Precursor, a mobile hardware development platform for secure applications, was made. In this post, I try to break it.

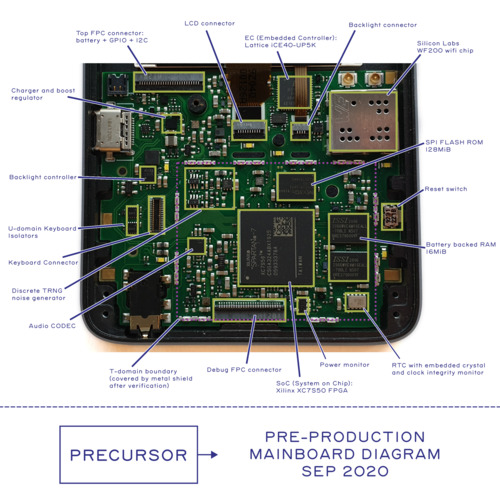

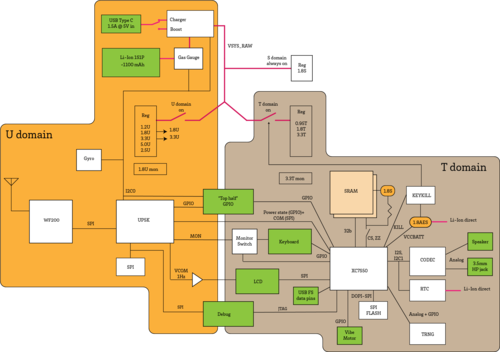

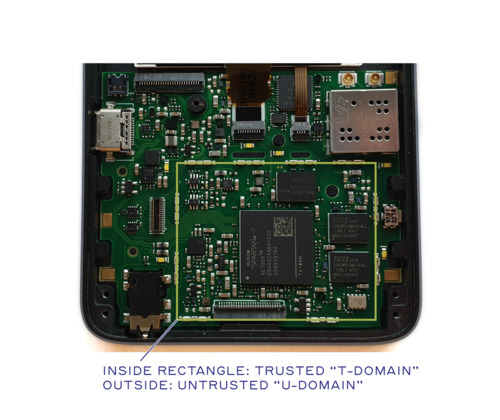

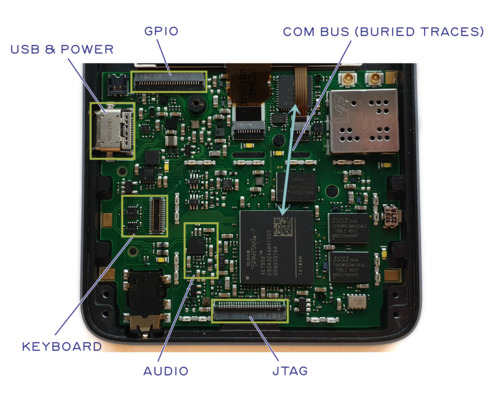

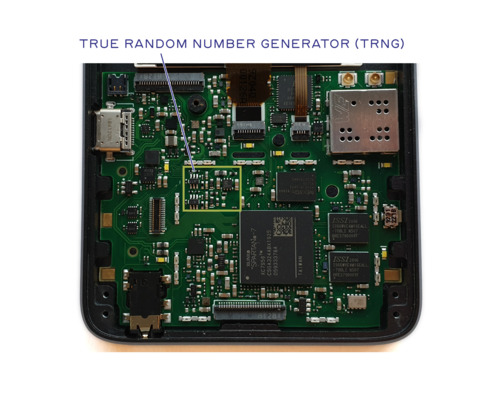

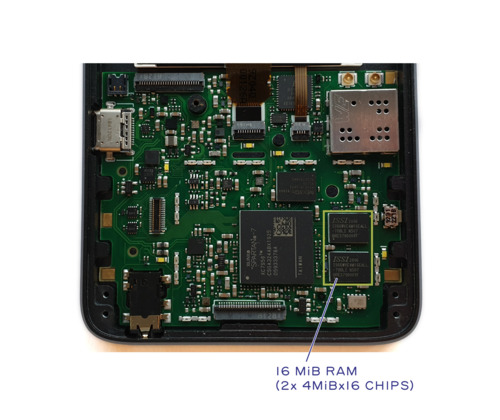

Hardware security is a multi-faceted problem. First, there is the question of “can I trust this piece of hardware was built correctly?”; specifically, are there implants and back doors buried in the hardware? We refer to this as the “supply chain problem”. It is a particularly challenging problem, given the global nature of our supply chains, with parts pulled from the four corners of the world, passing through hundreds of hands before reaching our doorstep. Precursor addresses this problem head-on with open, verifiable hardware: the keyboard, display, and motherboard are easy to access and visually inspect for correct construction. No factory or third-party tool is ever trusted with secret material. Precursor is capable of generating its own secret keys and sealing them within the hardware, without additional tools.

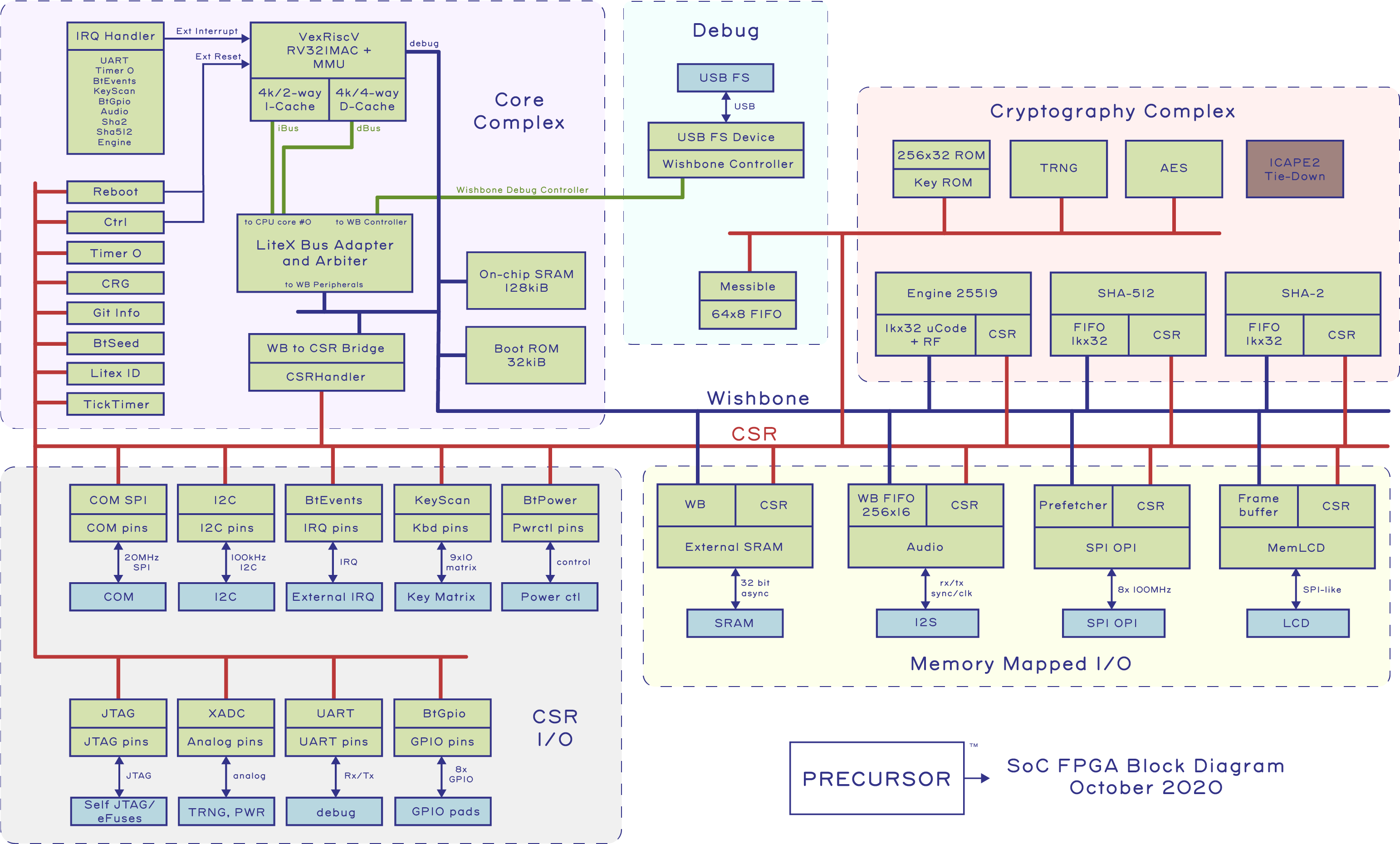

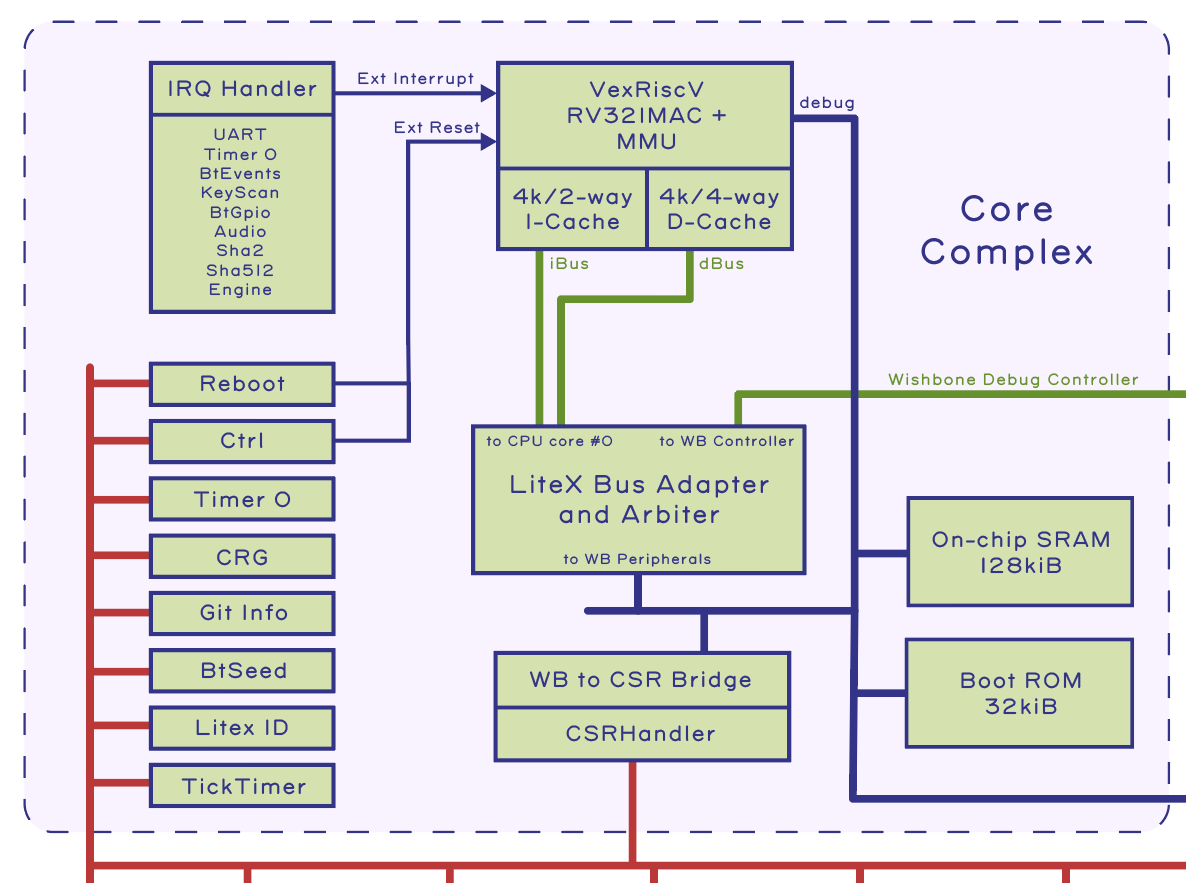

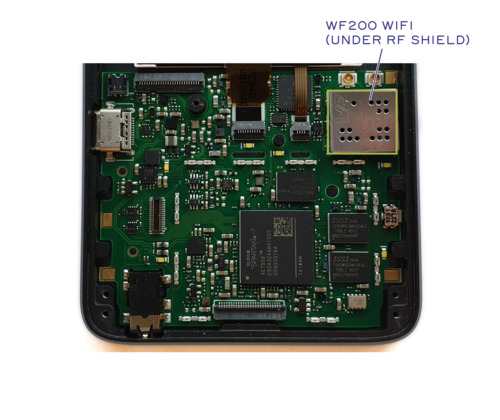

We also use a special kind of logic chip for the CPU – an FPGA – configured by the user, not the factory, to be exactly the CPU that the user specified. Crucially, most users have no evidence-based reason to trust that a CPU contains exactly what it claims to contain; few have the inspection capability to verify a chip in a non-destructive manner. On the other hand, with an FPGA, individual users can craft and inspect CPU bitstreams with readily available tools. Furthermore, the design can be modified and upgraded to incorporate countermeasures against hardware exploits discovered in the FPGA’s underlying fabric. In other words, the current trustability situation for an ASIC-style CPU is basically “I surrender”, whereas with an FPGA, users have the power to configure and patch their CPUs.

See my previous post for more details on how an FPGA helps solve the problem of transparency in CPU designs.

Trustable hardware is only one facet of hardware security. Beyond correct construction, there are also questions of “has anyone tampered with the hardware while I wasn’t looking?”, and “can my hardware keep its secrets if it falls into the wrong hands?” These are the “tamper evidence” and “tamper resistance” problems, respectively. The rest of this post will drill down into these two questions as they relate to Precursor.

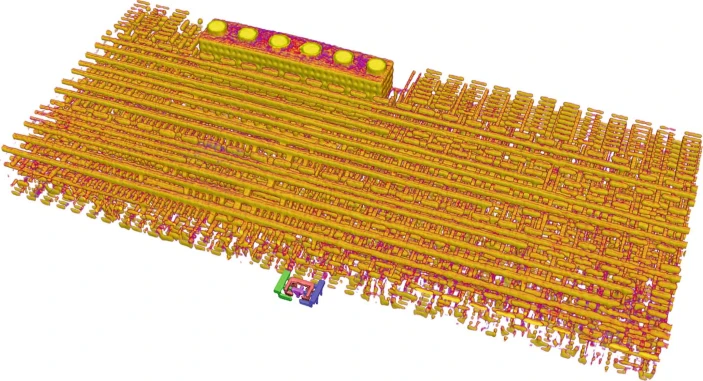

Despite any claims you may have heard otherwise, tamper resistance is a largely unsolved problem. Any secrets committed to a non-volatile format are vulnerable to recovery by a sufficiently advanced adversary. The availability of near-atomic level microscopy, along with sophisticated photon- and phonon-based probing techniques, means that a lab equipped with a few million dollars worth of top-notch gear and well-trained technicians has a good chance of recovering secret key material out of virtually any non-volatile storage media. The hard part is figuring out where the secrets are located on the chip. Once this is known for a given make and model, the attack is easy to repeat. This means the incremental cost of recovering keys from a previously studied design is in the ballpark of tens of thousands of dollars and a matter of days. For some popular types of silicon packaging, such as flip chips, so-called “back side probing” techniques may be able to non-destructively extract secret key material within a matter of hours.

Ptychographic X-ray imaging as referenced in this article reveals the detailed 3-D structure of a modern chip.

Keys stored in volatile memory (that is, battery-backed RAM) can significantly up the ante because any disruption of power to the key memory can disrupt its data. Cryogenic freezing of the circuitry can help preserve the data for readout in the event of power loss, but battery-backed key storage can also incorporate active countermeasures to detect environmental anomalies and reactively zeroize the keys. While this sounds great for storing extremely sensitive secrets, it’s also extremely risky because false triggers can erase the keys: millions of dollars of cryptocurrency could vanish with a single false move. Thus volatile keys are best suited for securing highly sensitive but ephemeral communications and not for the long-term storage of high-value secrets.

Tamper evidence, not to be confused with tamper resistance, is the ability of a secured hardware device to show evidence of tampering. An example of very basic tamper evidence is the “warranty void if removed” sticker. It is typically placed over a screw essential to holding the case together, so that any attempt to disassemble the unit requires puncturing the sticker. This very basic measure is easily defeated by duplicating and replacing the sticker, and it’s not hard to acquire even the holographic stickers if you know the right people.

The next level up in tamper evidence is the incorporation of a physically unclonable feature in the tamper-evident seal, such as measuring the random pattern of fibers in the paper of the sticker, or recording the position of glittery bits embedded in nail polish applied over a seam. Of course, none of these physical features can attest to any evidence of side-channel attacks, which do not require opening the case. In a side-channel attack, adversaries can gain enough information about the secrets contained within a piece of hardware just by observing patterns in its power consumption or in the spurious radio waves it emits. Attackers can also induce transient faults by exposing the hardware to high levels of radiation or temperature extremes, in the hope of tricking a device into disclosing secrets without breaking through any labels. Depending on the threat model, the hardware may simply need to hamper such attacks, or it may need to react to them and take appropriate action depending upon the nature of the attack.
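To make the power side channel concrete, here is a toy simulation of correlation power analysis. It is only a sketch under loud assumptions: the "device" leaks the Hamming weight of `input XOR secret` plus Gaussian noise, which is a textbook leakage model and not a measurement of Precursor or of any real chip. Every name in it (`simulate_traces`, `recover_key_byte`, `SECRET`) is hypothetical.

```python
import random


def hamming_weight(x: int) -> int:
    """Number of set bits, a common stand-in for switching power."""
    return bin(x).count("1")


def pearson(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5 if vx and vy else 0.0


def simulate_traces(secret, count, noise, rng):
    """ASSUMED leakage model: power = HW(input ^ secret) + Gaussian noise."""
    inputs = [rng.randrange(256) for _ in range(count)]
    power = [hamming_weight(p ^ secret) + rng.gauss(0, noise) for p in inputs]
    return inputs, power


def recover_key_byte(inputs, power):
    """Pick the key guess whose predicted leakage best matches the traces."""
    def score(guess):
        model = [hamming_weight(p ^ guess) for p in inputs]
        return pearson(model, power)
    return max(range(256), key=score)


rng = random.Random(1234)   # fixed seed so the run is repeatable
SECRET = 0xA7               # hypothetical key byte held by the "device"
inputs, power = simulate_traces(SECRET, count=1000, noise=0.5, rng=rng)
recovered = recover_key_byte(inputs, power)
print(hex(recovered))
```

The attacker in this model never sees `SECRET` directly, only the inputs and the noisy power samples. The correct guess stands out because its predicted Hamming weights track the measured power more closely than any other guess.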

Because hardware security is hard, the lack of a known exploit should never be taken as evidence of strong security. We take this fact to heart in Precursor: in addition to putting effort into designing a trustable, secured hardware solution, we have also put effort into exploiting it. Unlike most other secure hardware vendors, we also tell you about the exploits in detail, so you can make an informed decision about the practical limits of our implementation’s tamper evidence and resistance.

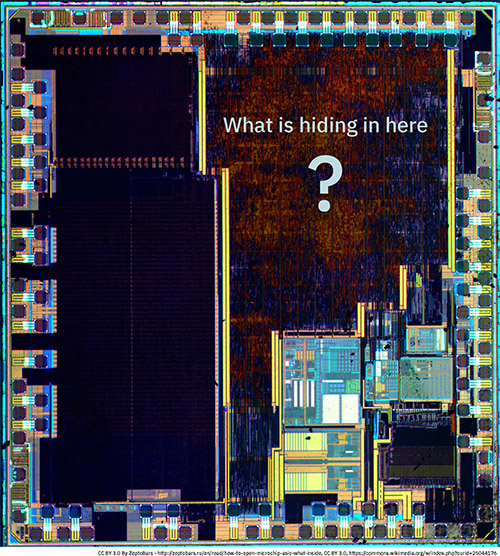

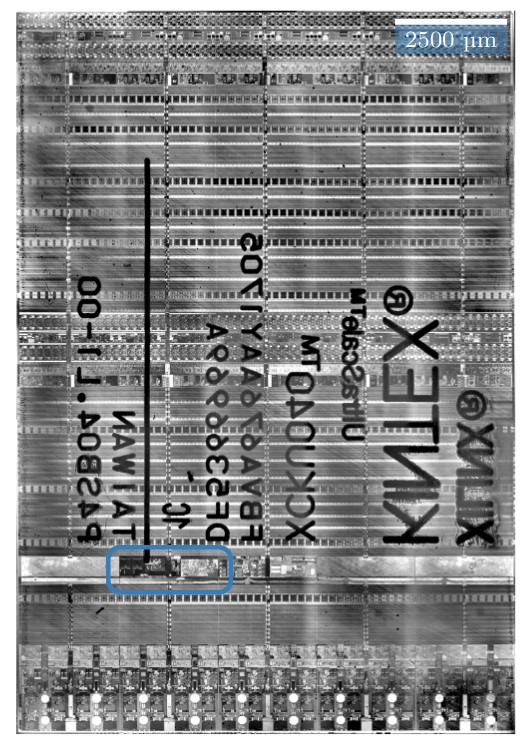

Precursor uses the Xilinx 7-Series of FPGAs for its trusted root. This series of FPGAs is perhaps one of the most extensively studied from a security perspective; dozens of papers have been published about its architecture and vulnerabilities. As a result, its key stores have been reverse engineered and analyzed down to the transistor level.

An example of the analysis done by other researchers on the 7-Series FPGAs

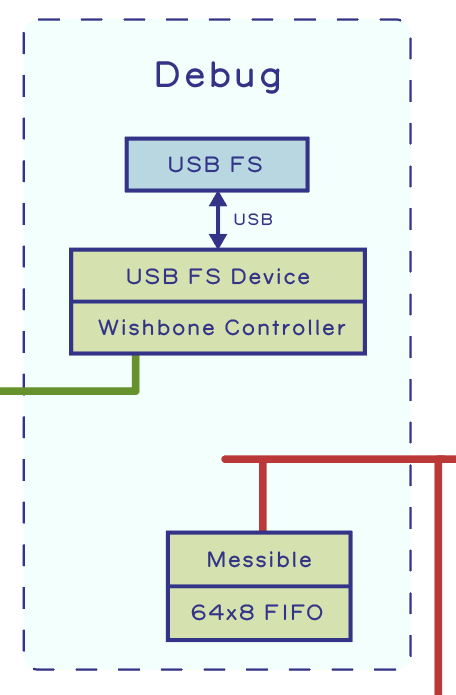

The most serious known vulnerability in the chip was published in early 2020, by Maik Ender, Amir Moradi, and Christof Paar in a paper titled “The Unpatchable Silicon: A Full Break of the Bitstream Encryption of the 7-Series FPGAs”. In a nutshell, it allows an attacker equipped with nothing more than the ciphertext of the bitstream and access to the FPGA’s JTAG port to recover the plaintext of a bitstream. It relies on a feature known as the WBSTAR register, normally used to recover from a corrupted boot. By design, it loads a recovery address from a location encrypted in the bitstream, and places the address in a register that is necessarily disclosed to the boot media to set a new loading location for the fallback image. Unfortunately, the “recovery address” is just a piece of data at the pointy end of an unchecked pointer. This means it can be used to fetch one word of plaintext from anywhere in the bitstream. The attack also takes advantage of the malleability of AES CBC blocks to adjust the WBSTAR load offset, thus allowing the FPGA to be used as an “oracle” to decrypt ciphertext one word at a time, without any knowledge of the encryption key.
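The CBC malleability that the attack leans on can be shown in a few lines. The sketch below is not the WBSTAR exploit and does not use AES. To stay dependency-free it substitutes a toy Feistel block cipher built from SHA-256, an assumption made purely for illustration, since malleability is a property of the CBC mode and not of the underlying cipher. All names (`toy_encrypt_block`, `forge_next_block`, the `ADDR=` strings) are hypothetical.

```python
import hashlib

BLOCK = 16  # bytes, the same block size as AES


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _round(key: bytes, i: int, half: bytes) -> bytes:
    """Feistel round function for the TOY cipher (a stand-in, not AES)."""
    return hashlib.sha256(key + bytes([i]) + half).digest()[:BLOCK // 2]


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, xor(left, _round(key, i, right))
    return left + right


def toy_decrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for i in reversed(range(4)):
        left, right = xor(right, _round(key, i, left)), left
    return left + right


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    prev, out = iv, []
    for i in range(0, len(plaintext), BLOCK):
        prev = toy_encrypt_block(key, xor(plaintext[i:i + BLOCK], prev))
        out.append(prev)
    return b"".join(out)


def cbc_decrypt(key: bytes, iv: bytes, ciphertext: bytes) -> bytes:
    prev, out = iv, []
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        out.append(xor(toy_decrypt_block(key, block), prev))
        prev = block
    return b"".join(out)


def forge_next_block(ciphertext: bytes, index: int, known: bytes, wanted: bytes) -> bytes:
    """Flip bits in ciphertext block `index` so that block `index + 1`
    decrypts to `wanted`. Needs the known plaintext of that block, not the key."""
    start = index * BLOCK
    forged = xor(ciphertext[start:start + BLOCK], xor(known, wanted))
    return ciphertext[:start] + forged + ciphertext[start + BLOCK:]


KEY = bytes(range(16))   # held by the "device"; the forger never touches it
IV = bytes(16)
ORIGINAL = b"HEADER..padding." + b"ADDR=0x00000000;"
WANTED = b"ADDR=0x00000040;"

ciphertext = cbc_encrypt(KEY, IV, ORIGINAL)
tampered = forge_next_block(ciphertext, 0, ORIGINAL[BLOCK:], WANTED)
result = cbc_decrypt(KEY, IV, tampered)
print(result[BLOCK:])                        # second block now equals WANTED
print(result[:BLOCK] == ORIGINAL[:BLOCK])    # False: first block is scrambled
```

The forger only needs the ciphertext and the plaintext of the block being changed. The price of the edit is that the block whose ciphertext was modified decrypts to garbage.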

The paper suggested a mitigation involving a pair of pins on the FPGA that change value based on the contents of the WBSTAR register to trigger a key reset (assuming you’ve stored the key in battery-backed RAM and not burned it permanently into the chip). While trying to implement the proposed mitigation, we found several ways to easily circumvent it. In particular, the external pin update is not automatic; it is done by an additional command later in the bitstream. By just omitting the pin updating command, we can render this mitigation ineffective without affecting the ability to read out the plaintext. We have published the code necessary to reproduce this attack in our jtag-trace GitHub repository.

This means that the tamper resistance of Precursor is equivalent to the strength of the glue applied over the SPI ROM chip and the JTAG port. The glue on the SPI ROM chip hampers the recovery of the ciphertext, necessary for the attack, while gluing the JTAG port shut hampers the upload of exploit code. Furthermore, the ciphertext should be treated as a secret, as knowledge of it is necessary for recovery of the plaintext. Therefore, the FPGA should still be burned with an encryption key and set to only accept encrypted bitstreams. This is because JTAG readout of the SPI ROM ciphertext can only happen with the help of a bitstream specifically crafted for the purpose. As long as such a bitstream is not encrypted to the secret key inside the FPGA, the ciphertext should be unavailable through the JTAG port.

Based on this evaluation, we’re planning on implementing the circuitry necessary to zeroize RAM-backed encryption keys, but not for the purpose of directly mitigating this exploit. Unfortunately, a separate oversight in the security features of the 7-Series means devices that boot from BBRAM must also accept unencrypted bitstreams. Therefore access to ciphertext is much easier on BBRAM-keyed devices than on devices that boot from fused keys. However, by including the BBRAM key zeroization circuitry, users could at least have a choice of which type of key (battery-backed or fused) to use, depending on their risk profile. Battery-backed keys complicate any hardware attack by requiring the hardware to receive uninterrupted power as it is disassembled, in exchange for the risk of key loss in case the user forgets to keep the battery charged. Fortunately, the power needed to preserve the key is minuscule, lower than the self-discharge current of the battery itself. The zeroization circuitry is also useful for users who wish to have a “self-destruct timer” or “panic button” to completely, securely, and irrevocably wipe their devices in a fraction of a second. This is useful for protecting high-value secrets that have a short or pre-determined lifetime.

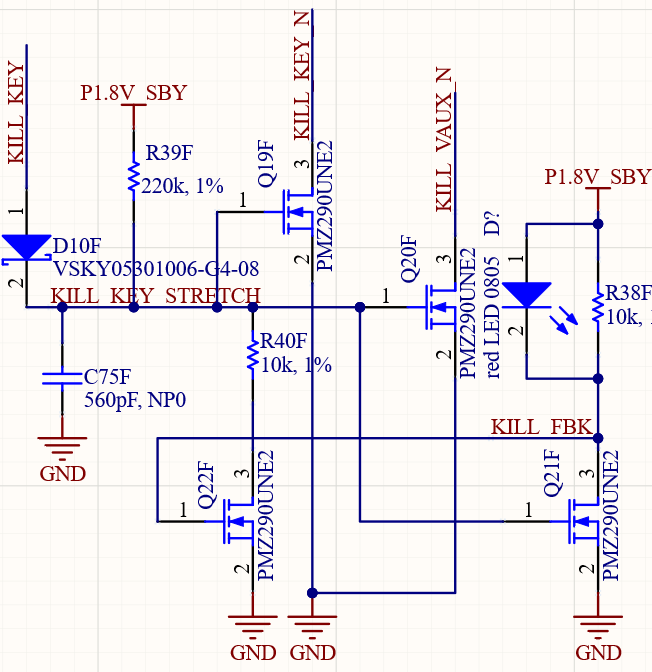

A schematic of Precursor’s proposed BBRAM key zeroization circuit. It is a pair of back-to-back NMOS-style inverters, which latches the system into a shutdown state until the battery is removed. There are additional circuits provisioned to rapidly discharge key-related voltage rails, not shown here.

Once Precursor has been glued shut, we believe the easiest method to recover the ciphertext and to gain access to the JTAG ports is to put the Precursor device into a precision CNC milling machine, mill out the PCB from the back side, and then place the remaining assembly into a pogo-pin based mechanism to perform the readout. This of course destroys the Precursor device in the process, but it is probably the most direct and reliable method of recovering the encryption keys, as it is very similar to an existing technique used for certain types of attacks on iPhones. Storing keys in BBRAM can greatly complicate the task of milling out the PCB by creating a high risk of accidental key erasure, but a sufficiently precise CNC with a non-conductive ceramic bit, or a precision laser-based ablation milling system, can reduce the risk of key loss substantially. Cryogenic cooling of the FPGA chip itself may also help to preserve key material in the case of very short accidental power glitches.

CNC milling machines can be used to perform precision attacks on dense circuit boards. Above is a vendor demo of a CNC milling bit removing a NAND chip from an iPhone motherboard, without damage to adjacent components. The mill can be readily purchased for a couple thousand USD.

The level of equipment and skill required to execute such attacks is higher than available in a typical Makerspace or government field office, but could be made available within a specialized lab of any modern intelligence agency or large technology corporation. Of course, if Precursors became a popular method to store high-value secrets, an entrepreneurial hacker could also start a lucrative service to recover keys from devices with just a couple hundred thousand dollars in startup capital.

That being said, such an attack would likely be noticed. In other words, if your device is functional and its seals intact, your Precursor has probably not been tampered with. But if it is confiscated or stolen, you can assume its secrets could be extracted in as little as a few hours by a well-prepared adversary. This is not ideal, but this barrier is still higher than countless other “secured systems”, ranging from game consoles to smartphones to crypto wallets, that can be broken with nothing more than a data cable and a laptop. Of course, these “easy breaks” are typically due to a bug in the firmware of the device, but unlike most of these devices, Precursor’s firmware is patchable, as well as being completely open for audit.

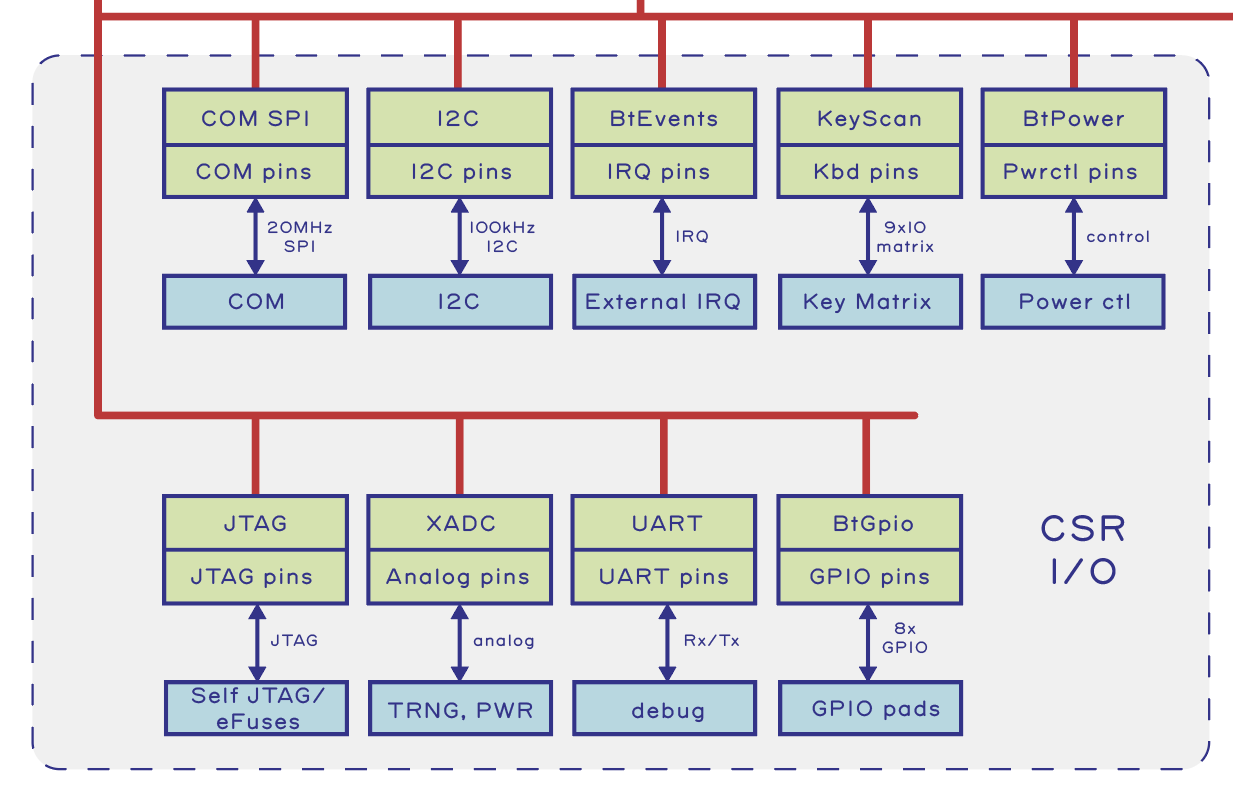

To assist users with gluing their Precursors shut, we include an easy-to-mix two-part epoxy in the box so that users may “pot” their boards once they have inspected their device and are satisfied it meets their standard for correct construction. Remember, we don’t seal the boards in the factory because that makes it impossible for users to confirm no back doors were inserted into the hardware. Upon completing inspection of the hardware, we recommend users perform the following actions to permanently seal their Precursor systems:

- Instruct the Precursor firmware to self-generate and burn an AES encryption key to the device, re-encrypt the FPGA bitstream to this key, and finally burn the fuse preventing boot from any other source.

- Disassemble the unit and snap the provided metal lid onto the motherboard. In addition to making it more difficult to physically access the hardware, it absorbs radio waves, thus making RF-based attacks more difficult.

- Hand-draw a memorable object or word onto a piece of tissue paper that is about 2.5 cm (1 inch) square.

- Use a cotton swab to drip the provided two-part clear epoxy through the holes of the RF shield, especially over the debug connector and SPI ROM.

- Lay the tissue paper over the wet epoxy, allowing the paper to become saturated with the epoxy.

- Wait 24 hours for the epoxy to dry, and re-assemble for use.

Precursor is designed to have the “Trusted” zone covered with a metal shield that can be glued down with epoxy.

The main risk of applying the epoxy is it leaking onto a connector that should not be glued down. The board is laid out to avoid that outcome, but caution is still needed during sealing. Pouring too much glue into the metal lid can result in glue leaking onto connectors that should not be sealed, preventing re-assembly. Notably, the sealing is done at the user’s risk; once a sealing attempt has been made, we can’t repair or replace a unit since the epoxy is unremovable by design.

The purpose of the tissue paper is to provide a simple but memorable way to confirm the uniqueness of your Precursor unit. It relies upon a human’s innate ability to recognize drawings or lettering made with their own hand. This method allows verification of the seal with simple visual inspection, instead of relying upon a third party tool to, for example, record and analyze the position of glitter dots or paper fibers for authenticity.

We provide Epotek 301 “Bi-packs” as the two-part epoxy for potting Precursor devices. It is commonly used for encapsulating optoelectronics and semiconductors such as LEDs and sensors. As such, it has excellent electrical properties, good optical clarity, robust mechanical durability, and a suitable viscosity for penetrating the nooks and crannies of small electronic parts. Once cured, any attempt to physically access the electronics contained within will leave some kind of mark as evidence of the attempt.

The Precursor device has a well-characterized hardware security level. We’ve designed the hardware to be easier to inspect and seal, so you have an evidence-based reason to trust the hardware. As all devices are vulnerable to hardware tampering, we’ve taken the approach of preferring devices with well-characterized vulnerabilities. We picked the Xilinx 7-Series FPGA family in part because it has been studied for years: its fuse boxes and encryption engine have been analyzed down to the gate level and there have been no findings so far reporting special access modes for law enforcement, undocumented shadow key stores, or other bugs that could lead to fully remote exploits or back doors. We’ve also taken the time to reproduce and disclose the known vulnerabilities, so that you don’t have to trust our statements about the security of the hardware: you’re empowered to reproduce our findings and make your own evidence-based findings about the trustworthiness of Precursor. In parallel with Precursor, the Betrusted project aims to raise the bar even higher, but doing so will require fabricating a custom ASIC, which incidentally carries its own set of verifiability and supply chain risks that we hope to address with countermeasures that are beyond the scope of this post.

In the end, there is no such thing as perfect security, but we firmly believe that the best mitigation is an evidence-based ecosystem built around the principles of openness, transparency, and lots and lots of testing. If you’re interested in participating in our ecosystem, please visit the Precursor crowdfunding page.